ML-Safe AV Video Data Processing Achieves Up to 50% Storage Reduction

ML-Safe Testing Series, Part 2: Benchmark testing shows how AV developers can achieve greater savings across storage, networking and compute…

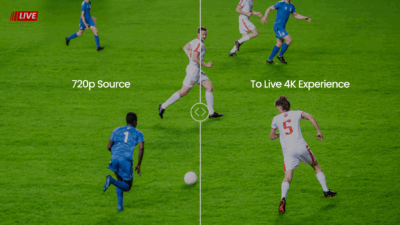

From 720p to 4K in Real-Time: CDN-Friendly Video Pipeline Powered by NVIDIA

Live sports broadcasters can deliver 4K experiences from 720p sources. The high-efficiency solution can reduce CDN costs by up to 50%…

Unlocking New Value from Media Archives with Modernized and Optimized Cloud Migration

The Challenge: A Decades-Old Burden. For tier-1 broadcasters, a major constraint is their decades-old media archive, often spanning hundreds of…

Beamr is Pushing the Boundaries of AV Data Efficiency, Accelerated by NVIDIA

Designed to protect visual fidelity, Beamr’s solution helps address the infrastructure challenges associated with autonomous vehicle…

How Content-Adaptive Video Compression Tackles Autonomous Vehicle Data Explosion

By Ronen Nissim and Tamar Shoham. Overview: The explosion of video data in autonomous vehicle (AV) systems presents a critical challenge to…

Is the Future of Video Processing Destined for GPU?

My Journey Through the Evolution of Video Processing: From Low-Quality Streaming to HD and 4K Becoming a Commodity, and Now the AI-Powered…

The Video Codec Race to 2025: How AV1 is Driving New Possibilities

With numerous advantages, AV1 is now supported on about 60% of devices and all major web browsers. To accelerate its adoption, Beamr…

Live 4Kp60 Optimized Encoding with Beamr CABR and NVIDIA Holoscan for Media

This year at IBC 2024 in Amsterdam, we are excited to demonstrate Live 4Kp60 optimized streaming with our Content-Adaptive Bitrate (CABR)…

Using Beamr Cloud Optimized AV1 Encodes for Machine Learning Tasks

Now available: hardware-accelerated, unsupervised codec modernization to AV1 for more efficient video AI workflows. AV1, the new kid on…

Beamr Now Offering Oracle Cloud Infrastructure Customers 30% Faster Video Optimization

Beamr’s Content Adaptive Bit Rate solution significantly decreases video file size or bitrate without changing the video…