Is HEVC Ready for Prime Time?

You’re home from work, ready to kick back, relax and catch a new show or movie on Netflix – the service that streams over 90 million hours of…

It’s Getting Intense

Where two parties are fighting, can a third party win? I ended my previous post on a positive note, suggesting that media optimization could…

Is It Legit to JIT?

If you’re delivering OTT content or a TV Everywhere service, and looking for ways to reduce your expenses, Just-In-Time (JIT) encoding and…

1. 2. 3. Cheese

We live in an amazing time when, with a smartphone alone, professional-quality photos and videos are no longer limited to the exclusive…

Introducing Beamr Blogger – Eliezer (Eli) Lubitch, President

Hi, my name is Eliezer Lubitch, but everyone calls me Eli. As the President of Beamr, I basically think, eat, sleep, breathe and even dream…

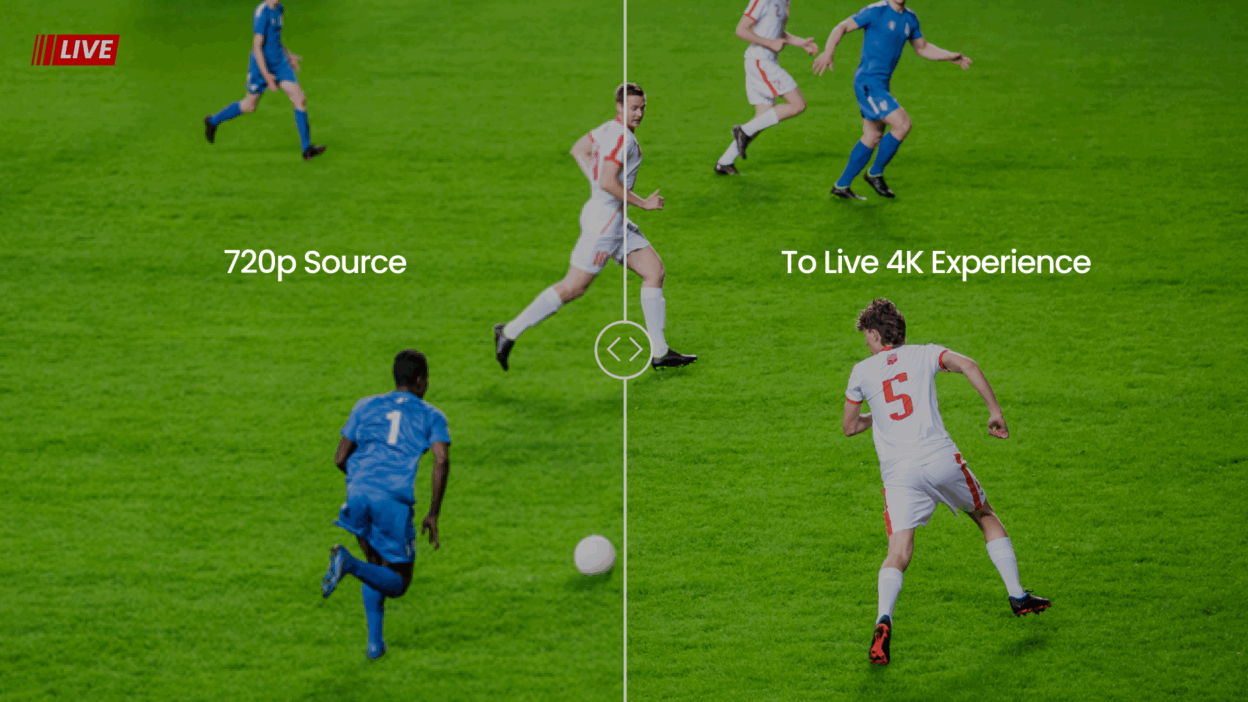

Why The Future of Sports Broadcasting Must Keep Pace with Live Streaming Adoption

Today’s sports fans expect digital availability everywhere and their viewing expectations are constantly rising. As fans have more choices to…

Whatever Happened to Mobile Broadcast TV?

Ten years ago, I was working as an independent consultant, specializing in mobile video technologies and services. I helped companies such as…

Live Streaming: It’s a Whole Different Ball Game

The market for OTT live streaming is not only on the rise, but is also becoming a larger part of the overall OTT streaming market.…

Beamr Video Cloud Service 101: How to Use the REST API

Introduction: Cloud-based video optimization offers a solution that is cost-efficient, scalable and seamlessly integrated into a video…

Driving the Mobile Visual Communication Revolution

Beamr to Present JPEGmini at Mobile Photo Connect on September 29. As image technology experts, the crux of our corporate mission has been to…