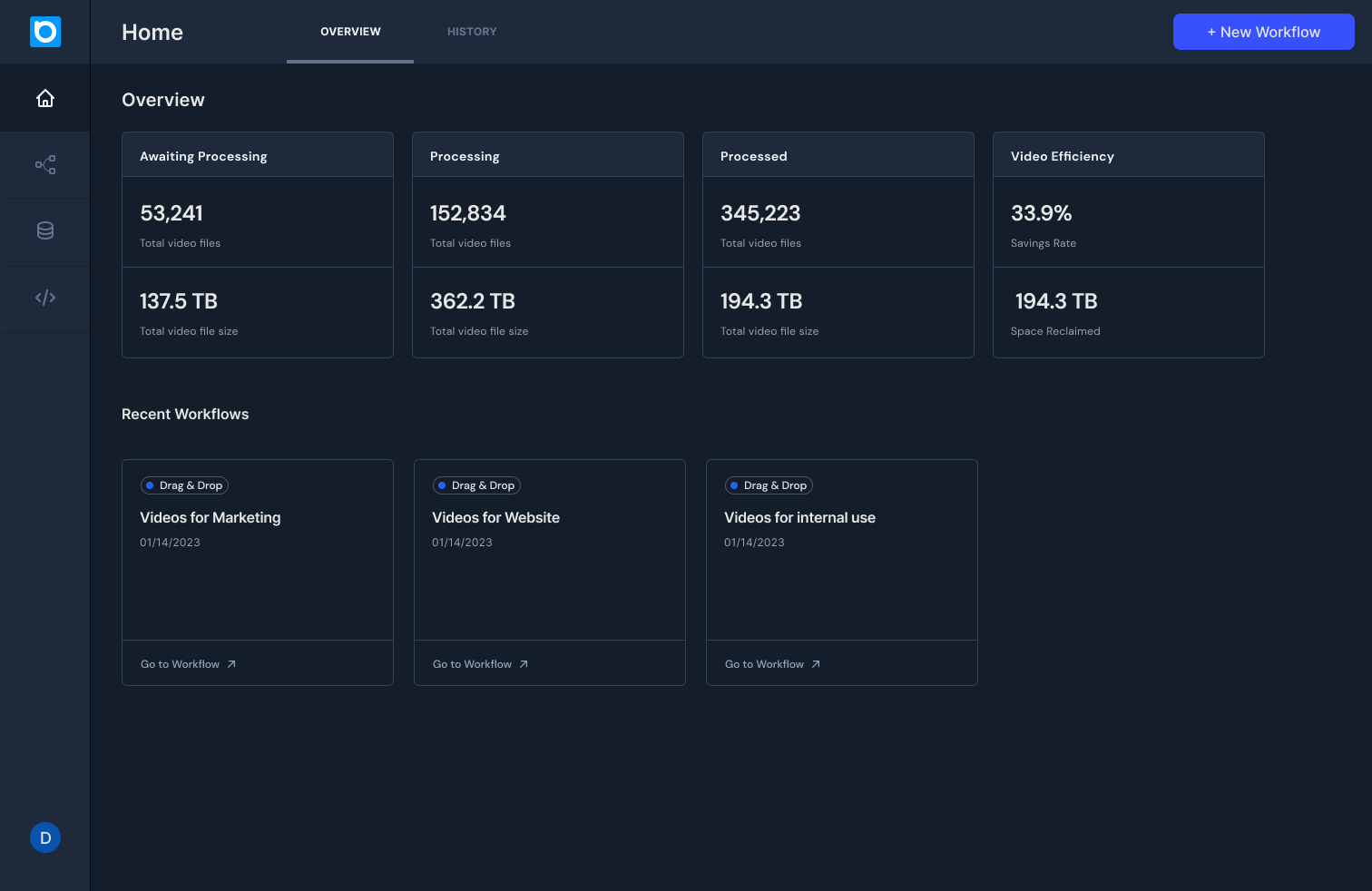

Beamr Cloud is live!

High Quality, High Scale, Low Cost video processing – Beamr Cloud is bringing technology that used to be exclusive to tech giants like…

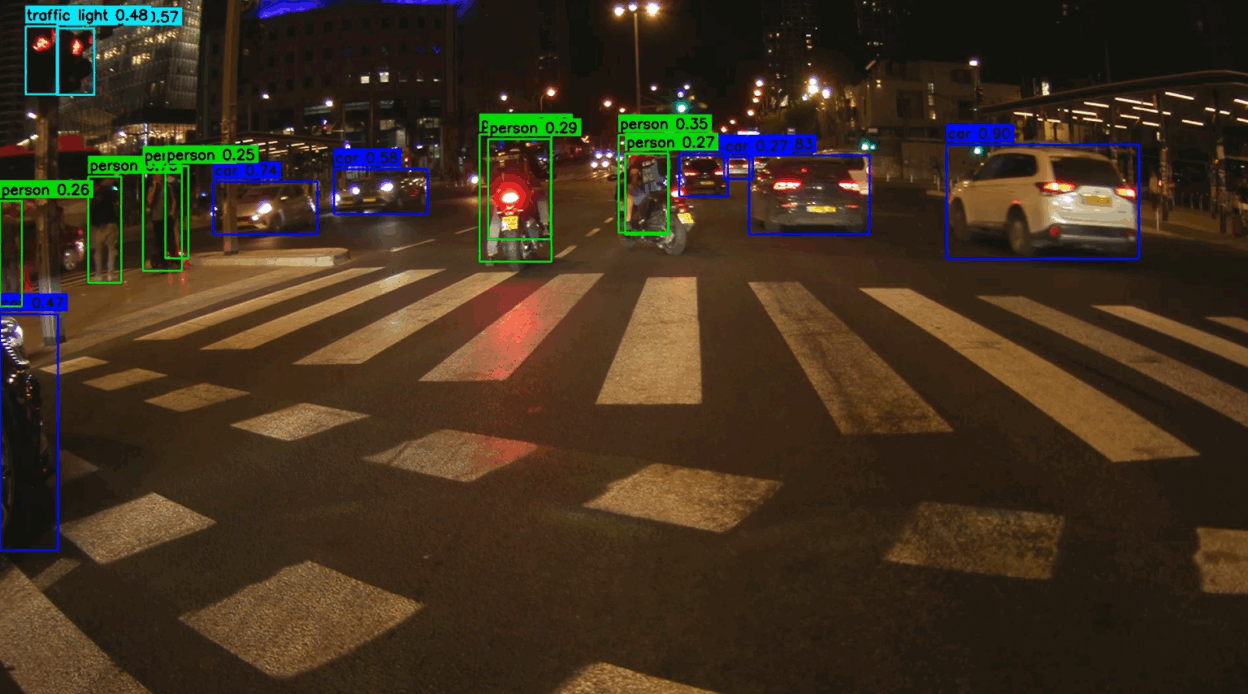

Beamr CABR Poised to Boost Vision AI

By reducing video size but not perceptual quality, Beamr’s Content Adaptive Bit Rate optimized encoding can make video used for vision AI…

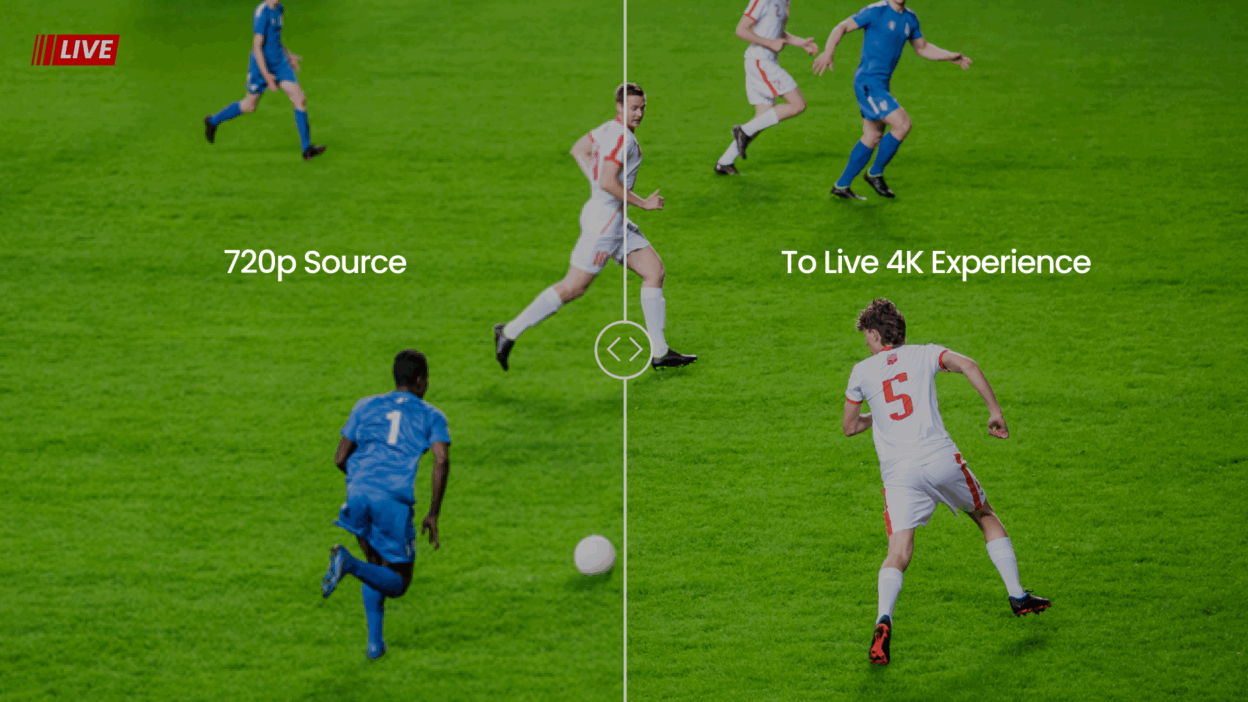

Automatically Upgrade Your Video Content to a New and Improved Codec

Easy & Safe Codec Modernization with Beamr using NVIDIA GPUs. Following a decade where AVC/H.264 was the clear ruler of the video…

Beamr Helps Businesses Keep Up With Generative AI Video Content

The proliferation of AI-generated visual content is creating a new market for media optimization services, with companies like Beamr well…

Beamr teams with NVIDIA to accelerate Beamr technology on NVIDIA GPUs

2023 is a very exciting year for Beamr. In February Beamr became a public company on NASDAQ:BMR on the premise of making our video…

Beamr Named Seagate Lyve Innovator of the Year 2021

We are thrilled to share with you that Beamr has won the Seagate Lyve Innovator of the Year competition!

Beamr Celebrates 50 Granted Patents

Receiving our 50th granted patent seemed like a good opportunity to reflect back on our IP journey, and share some lessons we learned along the way.

Adding Beamr’s Frame-Level Content-Adaptive Rate Control to the AV1 Encoder

In this post we present the results of integrating CABR's content-adaptive rate control with the libaom AV1 encoder. CABR can reduce AV1…

Optimizing Bitrates of User-generated Videos with Beamr CABR

In this blog post we present the results of encoding a set of videos from YouTube’s UGC dataset with Beamr's CABR technology.

Video Codecs in 2020 – The Race is On!

Introduction: There are several different video codecs available today for video streaming applications, and more will be released this year.…