Beamr AVC & HEVC Live Encoding Performance Milestones

It has been two years since we published a comparison of the two leading HEVC software encoder SDKs: Beamr 5 and x265. In this article you…

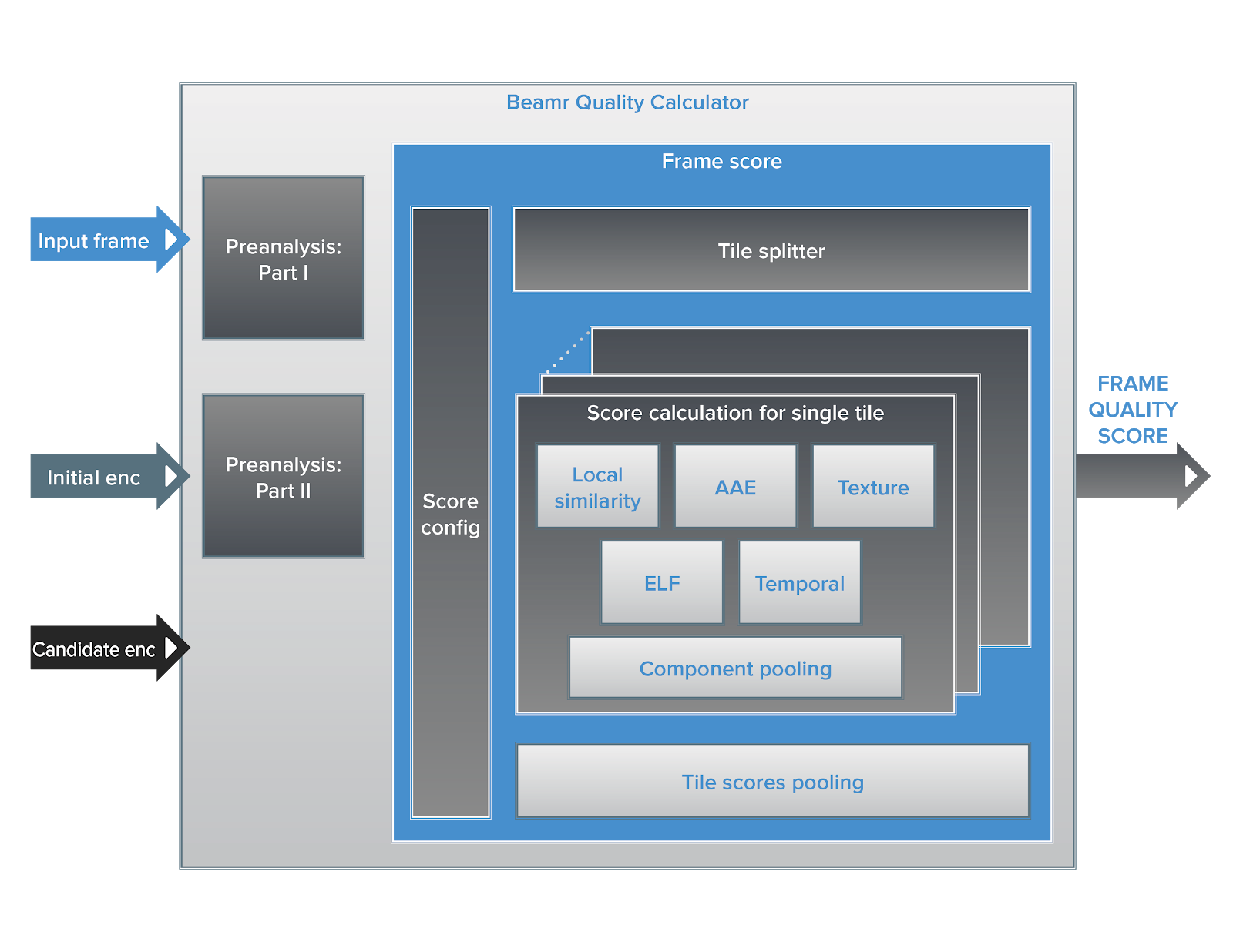

The Patented Visual Quality Measure that was Designed to Drive Higher Compression Efficiency

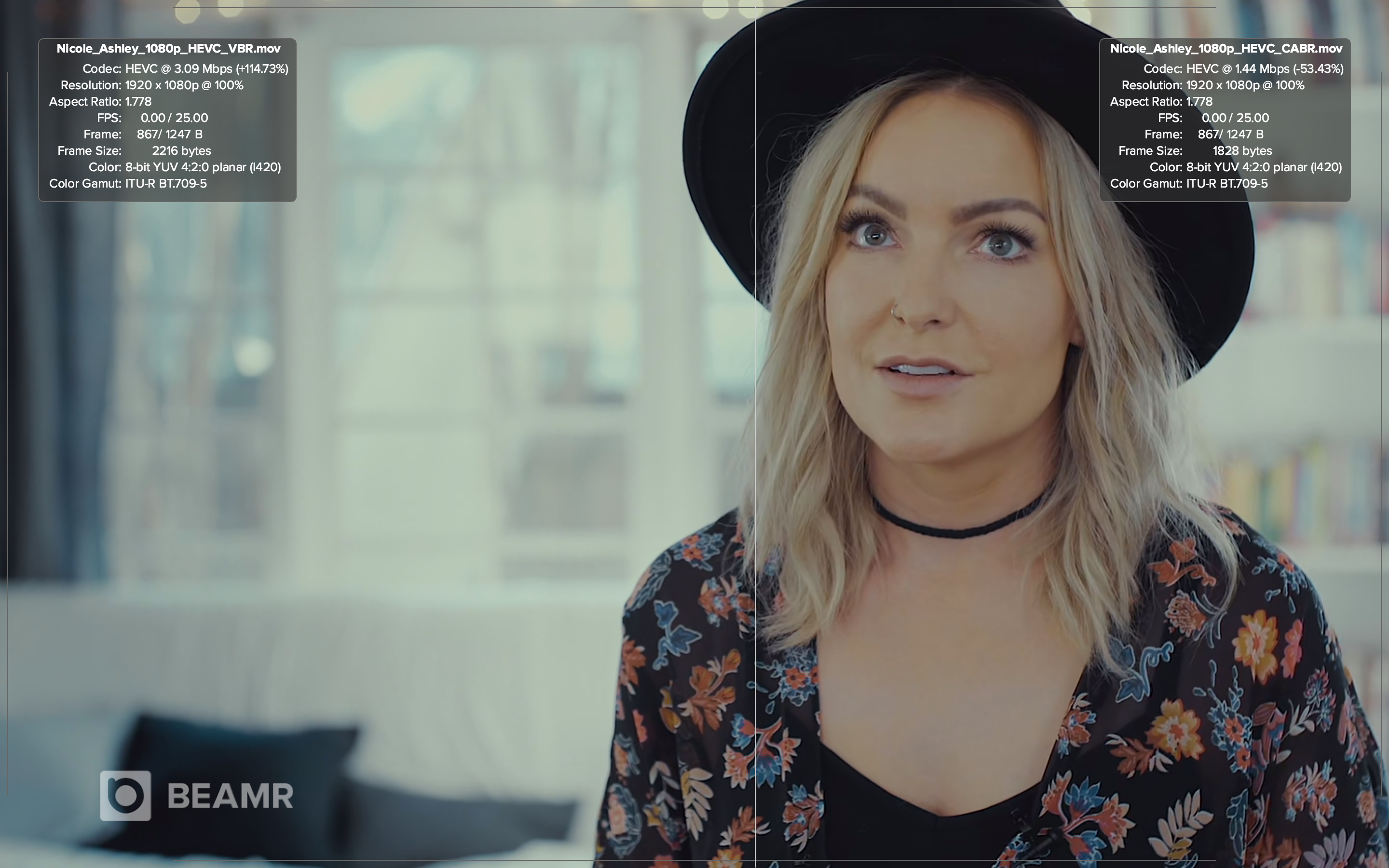

At the heart of Beamr’s closed-loop content-adaptive encoding solution (CABR) is a patented quality measure. This measure compares the…

A Deep Dive into CABR, Beamr’s Content-Adaptive Rate Control

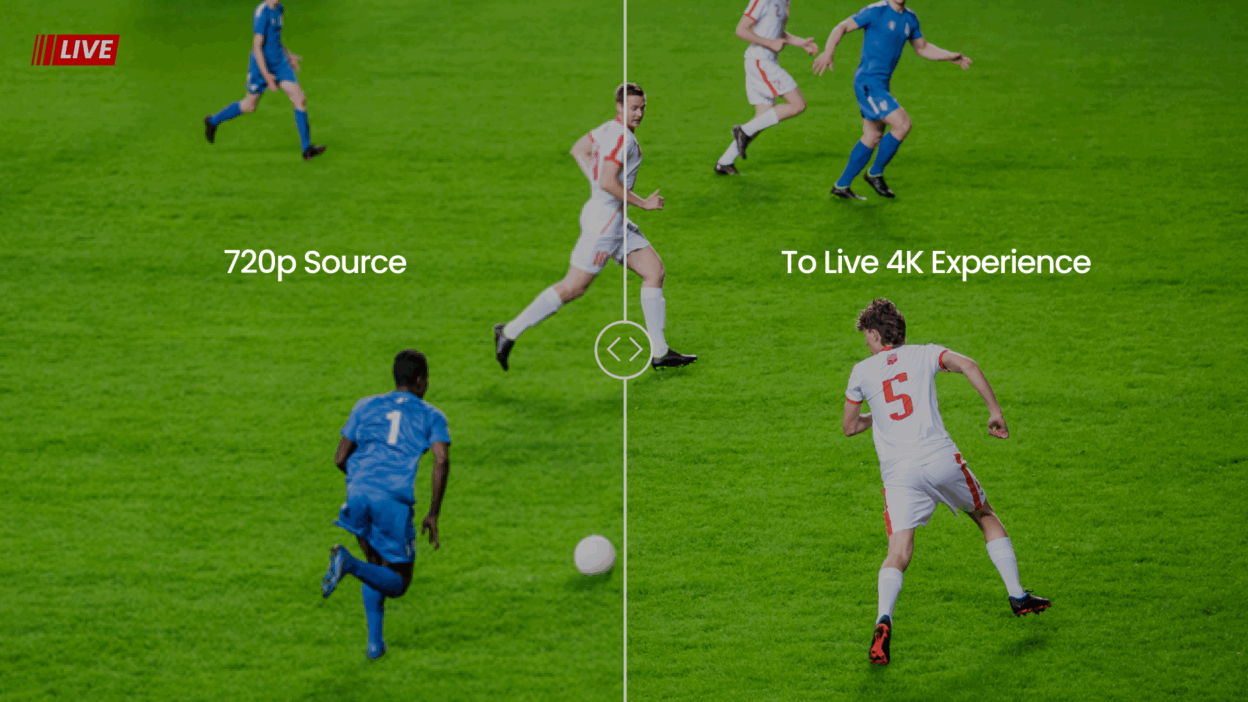

Going Inside Beamr’s Frame-Level Content-Adaptive Rate Control for Video Coding

When it comes to video, the tradeoff between quality and…

They don’t collect baseball cards, but eSports super fans are giving traditional sports a run for their money.

If you are a sports fan who grew up before the 90s, you likely have fond memories of collecting baseball memorabilia or going to your first game. To…

Why Game of Thrones is pushing video encoder capabilities to the edge

Game of Thrones entered its eighth and final season on April 14th, 2019. Though Game of Thrones has been a cultural phenomenon since the…

HEVC Bitrate Efficiency

Today, video streaming services must offer solutions that can evolve as the demand for content availability across a wide range of devices…

MPEG Through the Eyes of Its Chairman [podcast]

In “E08: MPEG Through the Eyes of Its Chairman,” The Video Insiders had the honor of sitting down with Leonardo Chiariglione, the…

Microservices – Good on a Bad Day [podcast]

Live streaming is arguably the least forgiving industry in today’s market. Anyone involved with live streaming workflows understands the…

In the battle between open source & proprietary technology, does video win? [podcast]

Video engineers dedicated to engineering encoding technologies are highly skilled and hyper-focused on developing the foundation for future…

2018, the Year HEVC Took Flight. [podcast]

By now, most of us have seen the data and know that online video consumption is soaring at a rate that is historically unrivaled. …