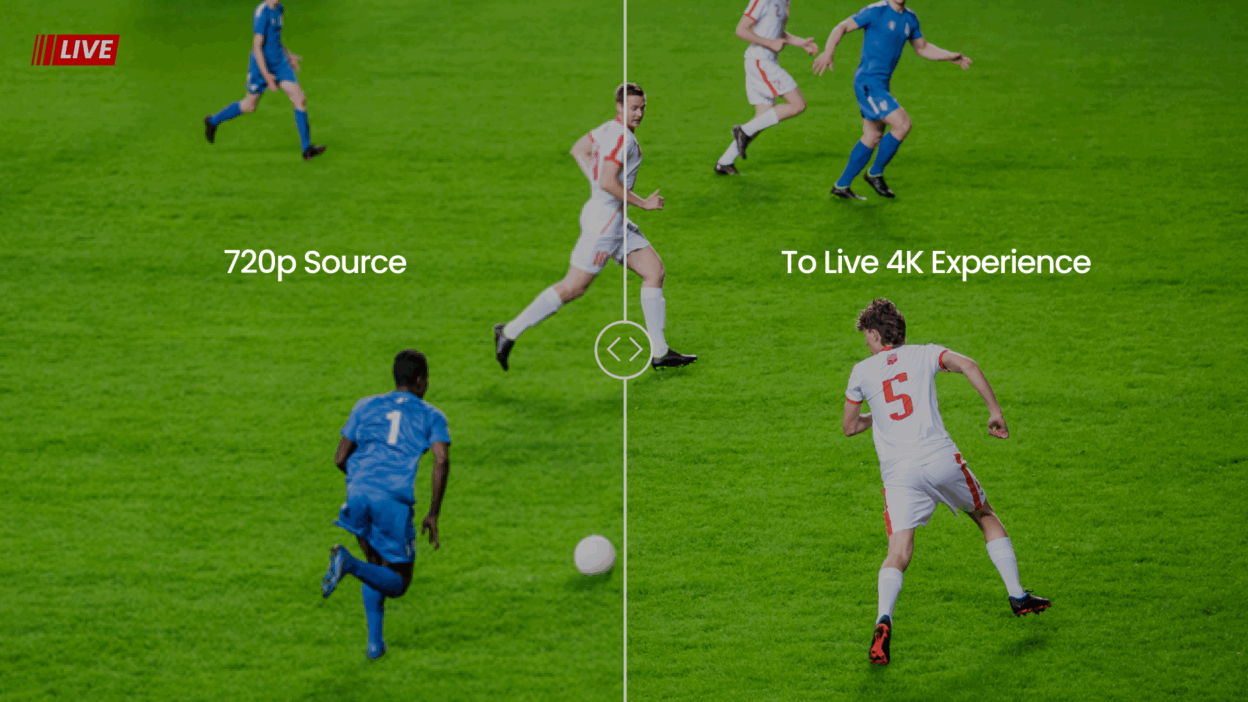

Joining Forces to Create a Better Streaming Experience

Streaming terabytes of video is complicated – and as expectations for high-quality video delivery increase daily, the undertaking only gets…

Why Closed-loop Perceptual Quality Measuring Beats All Other Optimization Methods

The market is heating up with solutions that claim to reduce the size of video files by impressive percentages, some up to 50%. But reducing…

Beamr @ IBC 2015

The Beamr team is already at the International Broadcasting Convention (IBC) in Amsterdam, setting up for a great show. This is where we can…

Over the Top: The War for TV is Heating Up in Q3

Video Compression Taking Center Stage: TV is at a tipping point. Over the top, or OTT, is the industry term for video content delivered over…

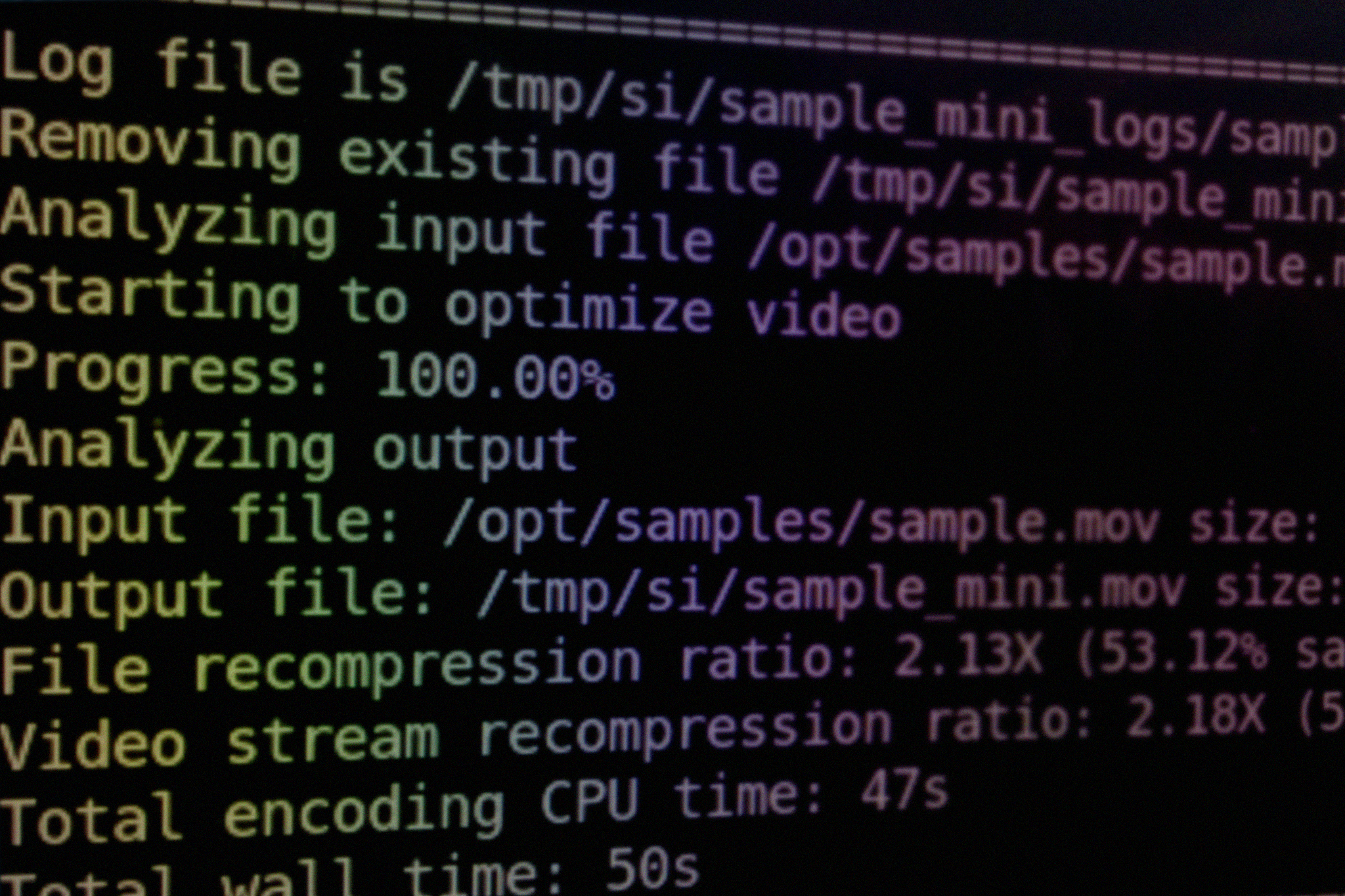

Integrating Media Optimization into a Video Processing Workflow

After introducing the concept of media optimization and understanding its value – improving viewing experience for streaming services, reducing…

Media Optimization: What’s In It for Me?

In last week’s post we introduced the concept of media optimization: taking an already-compressed media file and reducing the file size or…

What the Heck is Media Optimization?

Good question… If you search Google for Media Optimization, most of the results you find are for Social Media Optimization, the process of…

360-degree Videos Bring a New Cinematic Experience, but Create Delivery & Viewing Challenges

The movie industry has been around for over 100 years, but the cinematic experience hasn’t changed much: Basically, you watch the action…

Effects of a Crowded Net on Streaming Service ARPU

Do we have any hope of surviving the multi-screen, place-shifting consumer? If you are reading this post then you already know that video has…

Introducing Beamr Blogger – Mark Donnigan

Hi, my name is Mark Donnigan. As the Vice President of Marketing at Beamr, I am responsible for the corporate communications, market…