Game of Thrones entered its eighth and final season on April 14th, 2019. Though the show has been a cultural phenomenon since it first aired, the attention on these final episodes has been higher than ever. Right now, every aspect of season eight is under a microscope and up for discussion: from the Battle of Winterfell to theories on the Azor Ahai prophecy, super fans are taking to the internet and social media to debate and swap theories. Yet even if you installed the Chrome extension GameOfSpoilers to block Game of Thrones news on popular social networks, you probably did not miss the fans who flocked to social media to complain about the poor picture quality of Season 8 Episode 3, “The Long Night.”


If nothing else that episode of #GameofThrones is proof positive that @Xfinity needs to start broadcasting in 4K pronto because the night scenes in that kind of looked like ass in their HBO broadcast. If I’m going to pay this much for cable I want better picture quality.

— Michael S (@TheMovieVampire) April 29, 2019

So can we talk about the bootleg quality of the war scenes or nah? #GameOfThrones

— Marquis Johns (@weaksauceradio) April 29, 2019

Though not all viewers experienced degraded visual quality in “The Long Night,” enough of them reported a poor viewing experience that TechCrunch published an article titled “Why did last night’s ‘Game of Thrones’ look so bad?”

TechCrunch wasn’t alone: The Verge published an entire piece on how to set up your TV for a rewatch of “The Long Night,” something that should hardly be necessary. After all, how could fans need to rewatch an episode not because the plot was so twisted or complicated that they needed a second pass to decipher it, but because they couldn’t see what was happening on the screen? Game of Thrones super fans were not shy about taking to Twitter with their quality assessments.

Why does this look so bad?

@HBO @GameOfThrones the picture quality tonight is absolutely terrible. All that time spent filming this beautiful episode and it looks like it’s running off a 1989 VHS tape…

— Chris Bohn (@CBohn83) April 29, 2019

Before you throw a Valyrian steel dagger at your TV, let’s take a close look at what caused this poor video quality by examining how the video codecs used by commercial streaming services actually operate.

The video compression standards used in streaming, including AVC (H.264), which most pay-TV cable and satellite distributors also use, are hybrid block-based codecs. They partition each video frame, or picture, into blocks during compression and apply motion estimation between frames. Though the compression these techniques achieve is impressive, hybrid block-based schemes are inherently prone to blockiness and banding artifacts, which can be particularly evident in dark scenes.
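The first step of any block-based encoder is the partitioning described above. The sketch below splits a frame, represented as a 2-D list of luma values, into fixed-size square blocks. The fixed 8x8 size and the helper name `partition_into_blocks` are assumptions for illustration; real codecs use variable block sizes (16x16 macroblocks in AVC, coding tree units of up to 64x64 in HEVC).

```python
# Minimal sketch: split a frame (2-D list of luma values) into fixed-size
# square blocks. The 8x8 block size is an illustrative assumption.

def partition_into_blocks(frame, block_size=8):
    """Return a dict mapping (block_row, block_col) -> block (2-D list).

    Assumes the frame dimensions are multiples of block_size; real encoders
    pad the frame to satisfy this.
    """
    height, width = len(frame), len(frame[0])
    if height % block_size or width % block_size:
        raise ValueError("frame dimensions must be multiples of block_size")
    blocks = {}
    for by in range(0, height, block_size):
        for bx in range(0, width, block_size):
            blocks[(by // block_size, bx // block_size)] = [
                row[bx:bx + block_size] for row in frame[by:by + block_size]
            ]
    return blocks


# A 16x32 synthetic dark frame: a gentle horizontal ramp of luma values.
frame = [[16 + x // 4 for x in range(32)] for _ in range(16)]
blocks = partition_into_blocks(frame)
print(len(blocks), blocks[(0, 1)][0])
```

Each block is then predicted and coded independently, which is exactly why errors can line up along block edges.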

Blockiness is a video artifact where areas of an image appear to be made up of small squares rather than the fine detail and smooth edges the viewer expects to see. It happens when too little detail is preserved in each coding block: adjacent blocks no longer match, so each block appears separate from its neighbors.
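To make the mechanism concrete, here is a toy sketch on one row of pixels: it keeps only each block’s average (its “DC” value) and discards the detail inside, roughly what a starved encoder does. The 4-pixel block size and the ramp values are assumptions chosen for illustration. A smooth gradient whose every step is 1 turns into flat blocks with jumps of 4 exactly at the block edges, which is what the eye perceives as blockiness.

```python
# Toy illustration of blockiness: replace each block with its average and
# compare pixel-to-pixel steps at block edges vs. inside blocks.

def dc_only(row, block_size=4):
    """Replace every pixel with the (floored) mean of its block."""
    out = []
    for start in range(0, len(row), block_size):
        block = row[start:start + block_size]
        out.extend([sum(block) // len(block)] * len(block))
    return out


def edge_vs_interior_steps(row, block_size=4):
    """Return (max step across block edges, max step inside blocks)."""
    edge, interior = 0, 0
    for x in range(1, len(row)):
        step = abs(row[x] - row[x - 1])
        if x % block_size == 0:
            edge = max(edge, step)
        else:
            interior = max(interior, step)
    return edge, interior


ramp = list(range(16, 32))  # smooth gradient: every step is 1
print(edge_vs_interior_steps(ramp))
print(edge_vs_interior_steps(dc_only(ramp)))
```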

There are two main causes of blockiness. The first is a mismatch between content complexity and target bitrate, which occurs either when highly complex content is encoded at typical bitrates or when standard content is compressed at overly aggressive bitrates. Content providers can avoid this by using content-adaptive solutions that match the encoder bitrate to the content complexity. The second cause is poor decisions made by the encoder, such as discarding information that is crucial for visual quality.
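One rough way to see the first cause is to compute bits per pixel (bpp): how many bits the target bitrate leaves for each pixel of each frame. The same bpp may be plenty for simple content and far too little for complex content, which is why content-adaptive encoding varies the budget. The resolution, frame rate, and bitrates below are illustrative assumptions, not HBO’s actual settings.

```python
# Bits per pixel: the average bit budget per pixel per frame for a given
# target bitrate. All numbers below are illustrative assumptions.

def bits_per_pixel(bitrate_bps, width, height, fps):
    """Average number of bits available per pixel per frame."""
    return bitrate_bps / (width * height * fps)


for mbps in (2, 5, 10):
    bpp = bits_per_pixel(mbps * 1_000_000, 1920, 1080, 24)
    print(f"{mbps:>2} Mbps at 1080p24 -> {bpp:.3f} bpp")
```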

As TechCrunch noted, the images in “The Long Night” are very dark and span a limited range of colors and brightness levels, basically between grey and dark grey. Encoding this narrow range of grey shades, which filled most of the screen, produced “banding” artifacts: because the video is represented by just a few shades of grey, the transitions between them become visible and look like stripes instead of smooth gradients. In general, video suffers from banding when the color or brightness palette in use has too few values to accurately describe the shades present in part or all of a frame.
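The sketch below shows why a dark gradient is so prone to banding. It assumes one 1920-pixel row ramping from luma 16 (black in 8-bit limited-range video) to 40, which leaves only 25 distinct code values, so each value becomes a stripe roughly 80 pixels wide. Coarser quantization (a step of 4 or 8, an assumption standing in for aggressive compression) merges neighboring values into even fewer, wider bands.

```python
# Toy illustration of banding on a dark gradient. Width and luma range are
# illustrative assumptions.

def dark_gradient(width=1920, low=16, high=40):
    """One row of 8-bit luma ramping linearly from `low` to `high`."""
    return [low + (high - low) * x // (width - 1) for x in range(width)]


def quantize(row, step):
    """Uniform quantization: snap each value down to a multiple of `step`."""
    return [(v // step) * step for v in row]


def band_widths(row):
    """Lengths of runs of identical values, i.e. the visible stripes."""
    widths, run = [], 1
    for prev, cur in zip(row, row[1:]):
        if cur == prev:
            run += 1
        else:
            widths.append(run)
            run = 1
    widths.append(run)
    return widths


row = dark_gradient()
for step in (1, 4, 8):
    bands = band_widths(quantize(row, step))
    print(f"step {step}: {len(bands)} bands, widest {max(bands)} px")
```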

A common assumption, even among some video engineers, is that increasing the bitrate cures all video quality problems. But as we’ll see, in this case the systemic artifacts would likely have remained even if the bitrate had been doubled. The solution therefore likely lies not outside the encoding process but within the codec implementation itself.

That is, the video encoding engine must be improved to prevent situations like this in the future. The alternative would be for HBO and other premium content owners to instruct their filmmakers to avoid dark scenes. We’ll stick with option #1!

In this case, the video quality issues were not caused by an encoding bitrate that was too low. In fact, the bitrate used was more than sufficient to represent the limited range of colors and brightness. The issue lay in the decisions made by the specific video encoder HBO used: decisions about how to allocate the available bitrate among the different elements of the compressed video stream.

Without getting too deep into video compression technology, it is sufficient to say that a compressed video stream consists of two basic types of data.

The first type is prediction data, which tells the decoder how to create a prediction block from previously decoded pixels, in either the same frame or a reference frame. This prediction block acts as a rough estimate of the source block. The second type is the residual, or error, block encoded in the stream, which fills in the difference between the predicted block and the actual source video block.

Which prediction modes to use, and how much detail to preserve in the residual block, are decided by the encoder’s rate-distortion optimization. These decisions aim to minimize distortion, or maximize quality, for a specific bit allocation derived from the target bitrate.
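Rate-distortion optimization is usually expressed as minimizing a Lagrangian cost J = D + λ·R, where D is distortion, R is the number of bits, and λ sets the trade-off. The toy sketch below applies it to a single decision: code the residual or drop it. The rate model (1 bit to signal the mode plus 6 bits per nonzero coefficient) and the pixel-domain quantization without a transform are simplifying assumptions, not how any particular encoder counts bits.

```python
# Toy Lagrangian rate-distortion decision for one block: J = D + lambda * R.
# Assumptions: D is the sum of squared errors, R is a crude bit count, and
# the residual is quantized directly in the pixel domain (no transform).

def rd_cost(source, prediction, qstep, lam, code_residual,
            mode_bits=1, bits_per_coeff=6):
    """Return J = D + lam * R for one coding choice."""
    residual = [s - p for s, p in zip(source, prediction)]
    if code_residual:
        levels = [round(r / qstep) for r in residual]
    else:
        levels = [0] * len(residual)  # residual discarded entirely
    recon = [p + lvl * qstep for p, lvl in zip(prediction, levels)]
    distortion = sum((s - r) ** 2 for s, r in zip(source, recon))
    rate = mode_bits + bits_per_coeff * sum(1 for lvl in levels if lvl != 0)
    return distortion + lam * rate


def best_decision(source, prediction, qstep, lam):
    """Compare keeping vs dropping the residual; return (choice, costs)."""
    costs = {
        "code residual": rd_cost(source, prediction, qstep, lam, True),
        "skip residual": rd_cost(source, prediction, qstep, lam, False),
    }
    return min(costs, key=costs.get), costs


# A dark block with a faint +/-1 texture, predicted as flat grey.
source, prediction = [20, 21, 22, 21], [21, 21, 21, 21]
for lam in (0.1, 1.0):
    print(lam, best_decision(source, prediction, qstep=1, lam=lam))
```

With these numbers the residual is kept at λ = 0.1 but dropped at λ = 1.0: once λ exceeds 1/6, the 12 bits for two coefficients cost more than the squared error of 2 they would remove, so the faint texture is lost.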

When a scene is very dark and consists of small variations in pixel values, the numerical estimates of distortion may be skewed: erasing those variations produces only a small numerical error, even when the loss is visible. Components of the video encoder, including motion estimation and the rate-distortion algorithm, should adapt their allocations and decisions to this particular case.
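A quick calculation shows how skewed a purely numerical measure can be. The sketch assumes a dark block whose only detail is a ±2 texture around luma 20 and measures PSNR after that texture is flattened away. The result is about 42 dB, a score that would usually be read as good quality, even though all of the block’s detail is gone. The block values are illustrative assumptions.

```python
import math

# PSNR of a reconstruction against the original, for 8-bit samples.
def psnr(original, reconstructed, peak=255):
    mse = sum((o - r) ** 2 for o, r in zip(original, reconstructed)) / len(original)
    return float("inf") if mse == 0 else 10 * math.log10(peak ** 2 / mse)


# Hypothetical dark block: a +/-2 texture around luma 20 (64 pixels).
dark_texture = [18, 22] * 32
flattened = [20] * 64  # every bit of texture removed
print(round(psnr(dark_texture, flattened), 1))
```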

For example, motion estimation might classify the differences in pixel values as noise instead of actual motion, and thus produce inferior prediction information. Similarly, if the distortion measures are not tuned correctly, the residual may be treated as noise rather than true pixel information and be discarded or aggressively quantized.
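Aggressive quantization of a small residual can be illustrated with a dead-zone quantizer, where a rounding offset below 0.5 widens the range of values mapped to zero. In the sketch below, the offset of 1/6 and the quantization step of 8 are assumptions for illustration. With the same settings, a bright residual of ±12 to ±20 survives in coarsened form, while a dark residual of ±1 to ±2 is zeroed out completely.

```python
# Dead-zone scalar quantizer: level = sign(r) * floor(|r| / qstep + offset).
# An offset below 0.5 widens the zero bin. Values are illustrative assumptions.

def deadzone_quantize(residual, qstep, rounding_offset=1 / 6):
    levels = []
    for r in residual:
        mag = int(abs(r) / qstep + rounding_offset)  # floor for non-negative values
        levels.append(-mag if r < 0 else mag)
    return levels


def dequantize(levels, qstep):
    return [lvl * qstep for lvl in levels]


dark_residual = [2, -2, 1, -1, 2, -2]
bright_residual = [20, -20, 12, -12, 20, -20]
qstep = 8
print(deadzone_quantize(dark_residual, qstep))
print(dequantize(deadzone_quantize(bright_residual, qstep), qstep))
```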

Another common encoder technique that is affected, and often “fooled,” by very dark scenes is early termination. Instead of exhaustively searching all possibilities and computing the cost of each, many encoders use this technique to “guess” the best encoding decision and stop searching early. This improves encoder performance, but in dark scenes with small variations it can lead the encoder to the wrong decision.
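The sketch below shows how an early-termination rule can misfire on dark content. Candidate predictions are evaluated in order, and the search stops as soon as one has a sum of absolute differences (SAD) below a fixed threshold. The candidate names, the threshold of 32, and the block values are hypothetical. For the low-contrast dark block, the first candidate already scores below the threshold, so the search stops and misses the exact match, while the same rule correctly keeps searching on a bright, textured block.

```python
# Hypothetical early-termination rule for choosing a prediction.

def sad(a, b):
    """Sum of absolute differences between two blocks (flattened)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def choose_prediction(source, candidates, early_exit_threshold=None):
    """Evaluate (name, prediction) pairs in order; optionally stop at the
    first whose SAD is below the threshold. Returns (name, evaluated count)."""
    best_name, best_cost, evaluated = None, None, 0
    for name, prediction in candidates:
        cost = sad(source, prediction)
        evaluated += 1
        if best_cost is None or cost < best_cost:
            best_name, best_cost = name, cost
        if early_exit_threshold is not None and cost < early_exit_threshold:
            break
    return best_name, evaluated


dark = [16, 17, 18, 19] * 4  # low-contrast 4x4 block, flattened
candidates = [("skip", [17] * 16), ("motion", list(dark))]
print(choose_prediction(dark, candidates, early_exit_threshold=32))
print(choose_prediction(dark, candidates))  # exhaustive search
```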

Some encoding engineers use a technique called “capped CRF” (constant rate factor) to encode video at a constant quality, subject to a bitrate ceiling, instead of at a predefined bitrate. This is a simple form of “content-adaptive” or “content-aware” encoding, which produces a different bitrate for each video clip or scene based on its content. In some implementations, this technique can also be “fooled” by the limited range of color and brightness values in dark scenes and may quantize very aggressively, removing too much information from the residual blocks and producing these blockiness and banding artifacts.
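For reference, a capped-CRF encode with FFmpeg and libx264 typically combines `-crf` (the constant-quality target) with `-maxrate` and `-bufsize` (the VBV settings that enforce the ceiling). The helper below only builds the command line and does not run it. The file names and the specific CRF, maxrate, and bufsize values are assumptions for illustration; 23 is x264’s default CRF.

```python
# Build (but do not run) an ffmpeg/libx264 capped-CRF command line.
# File names and rate values are illustrative assumptions.

def capped_crf_command(src, dst, crf=23, maxrate="5M", bufsize="10M"):
    return [
        "ffmpeg", "-i", src,
        "-c:v", "libx264",
        "-crf", str(crf),     # constant-quality target; lower means higher quality
        "-maxrate", maxrate,  # bitrate ceiling enforced through the VBV
        "-bufsize", bufsize,  # VBV buffer size used to enforce the ceiling
        dst,
    ]


print(" ".join(capped_crf_command("episode.mov", "episode_capped.mp4")))
```

To execute it, the list could be passed to `subprocess.run(cmd, check=True)` on a machine with FFmpeg installed.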

In summary, we can conclude that dark scenes can lead to various encoding issues if the encoder is not “well prepared” for this type of content, and it seems that this is what happened with this Game of Thrones episode.

Better quality next time.

Hey HBO, why is #GameofThrones in potato quality?

— Rabbit Raccoon (@PollyQPublic) April 29, 2019

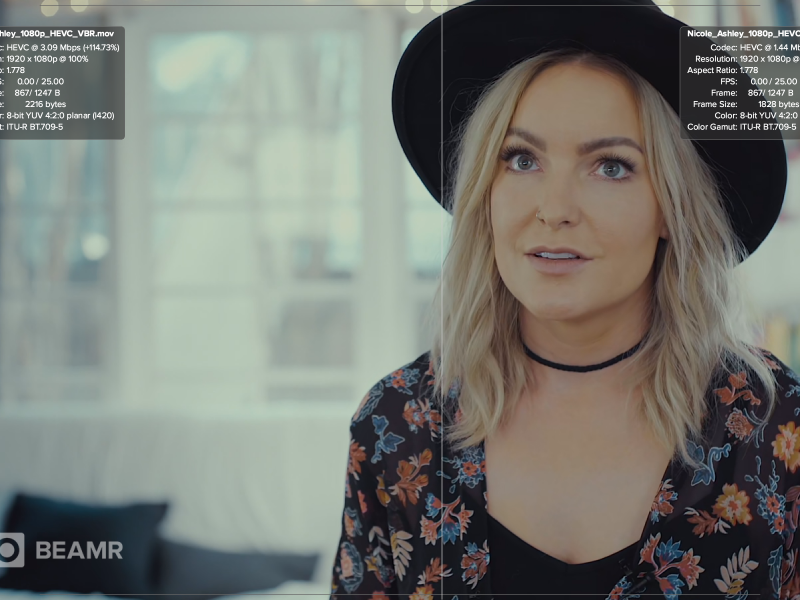

To ensure good video quality across different content types, the encoder must be able to adapt correctly to each and every frame it encodes. In Beamr encoders, we tackle this with a combination of tools and algorithms that provide the best video quality possible.

Beamr encoders use unique, patented and patent-pending approaches to calculate psycho-visual distortions, which the rate control module uses when deciding on prediction modes and on bit allocations for the different components of the compressed video data. This means the actual visual impact of each decision is taken into account, improving the visual quality of the content across a variety of content types.

Beamr encoders offer a wide range of encoding speeds for different use cases, from lightning-fast speeds that enable a full 4K ABR stack to be generated on a single server for live or VOD, to slower speeds that maximize quality. When airing premium content of the caliber of Game of Thrones, one should opt for maximum quality by using the slower encoder speeds.

At these speeds, the encoder is wary of invoking early termination methods and thus does not overlook data that may be hiding in small deviations of dark pixel values. We invest a huge effort in discovering the optimal combinations for all the internal controls of the algorithm, such as the optimal lambda values for the different rate-distortion cost calculations, optimal values for the deblocking filter (and SAO in the case of Beamr 5 for HEVC), and many other details, none of which are overlooked.
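As a point of reference for what “lambda values” means, the widely published H.264 reference-software (JM) starting point ties the mode-decision lambda to the quantization parameter as λ_mode = 0.85 · 2^((QP − 12) / 3), and uses √λ_mode for motion estimation with SAD costs. The sketch below simply evaluates those formulas; it is not Beamr’s tuning, which is not public, and the QP values are arbitrary examples. Note that every increase of 3 in QP doubles λ.

```python
import math

# Well-known H.264 reference-model (JM) lambda formulas, shown for
# illustration only; production encoders tune these further.

def lambda_mode(qp):
    """Mode-decision lambda: 0.85 * 2^((QP - 12) / 3)."""
    return 0.85 * 2 ** ((qp - 12) / 3)


def lambda_motion(qp):
    """Motion-estimation lambda for SAD-based costs: sqrt(lambda_mode)."""
    return math.sqrt(lambda_mode(qp))


for qp in (22, 27, 32, 37):
    print(qp, round(lambda_mode(qp), 2), round(lambda_motion(qp), 2))
```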

Rather than use a CRF-like approach to constant-quality encoding, Beamr employs a sophisticated content-adaptive encoding technique called CABR. This content-adaptive bitrate mode operates in a closed loop and examines the quality of each frame using a patented perceptual quality measure. The measure is also specifically tuned to adapt to the “darkness” of the frame and of each region within it, which makes it highly effective even when processing very dark scenes such as those in “The Long Night,” or fade-in and fade-out sequences.
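The general idea of closed-loop, per-frame constant-quality encoding can be sketched as a search: encode at a candidate QP, measure the result, and keep the coarsest QP whose quality still meets the target. This is a generic illustration, not Beamr’s CABR, whose perceptual quality measure is proprietary. The `quality_at` callback is a hypothetical stand-in for “encode, decode, and score this frame at this QP,” assumed to be non-increasing in QP; the QP range 10 to 51 is also an assumption.

```python
# Generic closed-loop QP search (hypothetical; not Beamr's CABR).

def coarsest_acceptable_qp(quality_at, target, qp_min=10, qp_max=51):
    """Binary-search the highest QP whose measured quality meets `target`.

    Returns None if even qp_min misses the target.
    """
    if quality_at(qp_min) < target:
        return None
    lo, hi = qp_min, qp_max
    while lo < hi:
        mid = (lo + hi + 1) // 2
        if quality_at(mid) >= target:
            lo = mid      # still good enough: try a coarser QP
        else:
            hi = mid - 1  # too degraded: back off
    return lo


# Toy quality model standing in for a real encode-and-measure step.
print(coarsest_acceptable_qp(lambda qp: 100 - 2 * qp, target=40))
```

A darkness-aware quality measure would plug in through `quality_at`, so that a dark frame is not pushed to a coarse QP just because a generic score says it still looks fine.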

Looking to the Future

For content providers, viewer expectations and demands for quality will continue to rise each year. A decade ago, you could get by without delivering a consistent experience across devices. Today, not only do your viewers notice video degradation, but failing to deliver an experience in line with their quality expectations can have a massive impact on your audience and on churn.

To see what high quality at the lowest bitrate should look like, try Beamr Transcoder for free or contact our team by sending an email to sales@beamr.com to learn about our comparison tool Beamr View.